
Top 5 AI-Powered Workflows for Incident Response (Q4 2025)

Security operations teams are drowning in alerts, logs, threat feeds, and a myriad of tools: SIEM dashboards, threat intelligence portals, identity management consoles, email gateways, and more. Each system is essential, but juggling them leads to constant context switching and slow response times.

Kindo’s AI-driven security actions are built to break that cycle by automating and optimizing incident response tasks that span these platforms. In this post, we walk through five real SecOps workflows that cut through the noise and reduce manual toil, from assembling incident timelines to orchestrating remediation scripts:

• Create a human-readable incident timeline from raw logs.

• Bulk enrich and prioritize indicators of compromise from a list.

• Monitor login activity for anomalies across identity platforms.

• Investigate phishing emails for malicious attachments using an LLM.

• Automatically generate response scripts for remediation.

Each example shows how an AI-powered chat interface can simplify and accelerate incident response: retrieving information, analyzing data, and even executing multi-step actions from a single prompt. Let’s dive in.

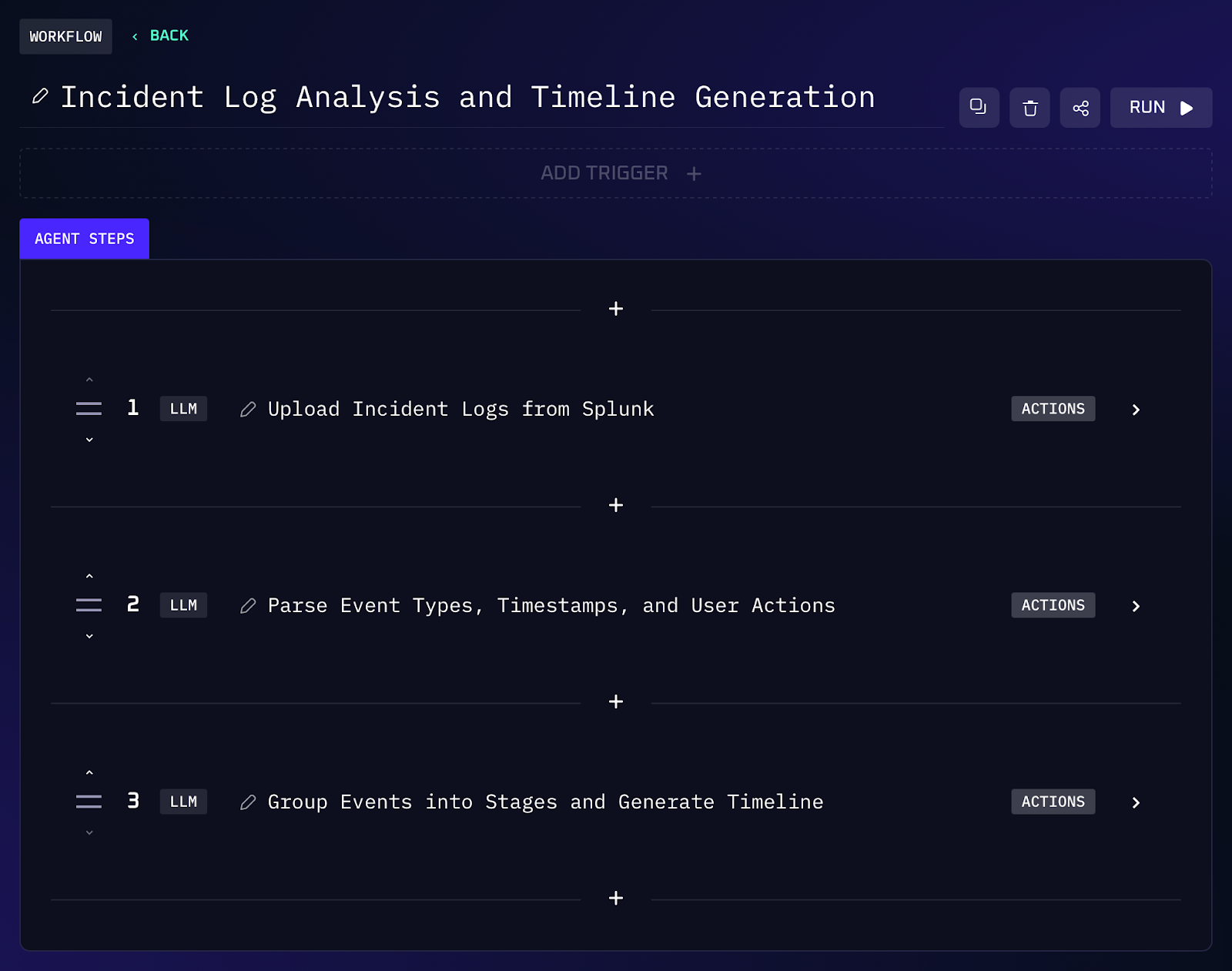

1. Generate an Incident Response Timeline from Logs

When an incident occurs, one of the first tasks is reconstructing what happened and when. Normally, an analyst exports logs from a SIEM like Splunk and manually sifts through events to piece together a narrative. This can mean hours of filtering by event type, correlating timestamps, and sorting actions into incident phases. Kindo’s AI workflows can eliminate much of that drudgery. For example, you could ask: “Here are the Splunk logs from the incident, parse them by event type and timeline stage, and give me a summary of key events with timestamps.” The assistant parses the raw log data, groups events into standard incident stages (detection, containment, eradication, recovery), and outputs a concise timeline listing each key event with its timestamp.

Workflow Steps (Incident Timeline Assembly)

1. The agent integrates with Splunk (for example) or ingests an exported log file containing all incident-related events (authentication logs, alerts, system events, etc.).

2. Kindo’s AI parses the log entries and identifies event types, timestamps, and user or system actions. It automatically categorizes events into the appropriate incident response stages – for example, flagging the initial detection (first alerts or signs of compromise), actions taken to contain the threat, eradication steps (like malware removal), and recovery events (system restore or closing the incident).

3. The agent outputs a human readable timeline that lists the sequence of events grouped by stage. For instance: “Detection: 14:32 GMT – IDS alert triggered on server X (malware signature); Containment: 14:45 GMT – User account ‘jsmith’ disabled, infected host isolated from network; Eradication: 15:10 GMT – Malware removed from host, forensic image taken; Recovery: 15:30 GMT – Patched vulnerable service, restored connectivity.” This gives the team an immediate narrative of the incident without manual log diving.
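The grouping in steps 2 and 3 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Kindo’s implementation: it assumes events were already exported as records with hypothetical `timestamp`, `event_type`, and `description` fields, and it replaces the AI’s classification with a naive keyword lookup (the `STAGE_KEYWORDS` map is invented for illustration) so the data flow stays easy to follow.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical keyword map from event types to incident stages.
# Real SIEM event names differ; an LLM would classify far more flexibly.
STAGE_KEYWORDS = {
    "Detection": ("alert", "ids", "detect"),
    "Containment": ("disable", "isolate", "quarantine"),
    "Eradication": ("remove", "delete", "forensic"),
    "Recovery": ("patch", "restore", "close"),
}
STAGE_ORDER = [*STAGE_KEYWORDS, "Unclassified"]


def classify(event_type: str) -> str:
    """Return the first stage whose keywords appear in the event type."""
    lowered = event_type.lower()
    for stage, keywords in STAGE_KEYWORDS.items():
        if any(k in lowered for k in keywords):
            return stage
    return "Unclassified"


def build_timeline(events: list[dict]) -> dict[str, list[tuple[datetime, str]]]:
    """Group events by stage; within each stage, sort chronologically."""
    timeline: dict[str, list[tuple[datetime, str]]] = defaultdict(list)
    for event in events:
        ts = datetime.fromisoformat(event["timestamp"])
        timeline[classify(event["event_type"])].append((ts, event["description"]))
    for entries in timeline.values():
        entries.sort()
    return dict(timeline)


def render(timeline: dict[str, list[tuple[datetime, str]]]) -> str:
    """Produce one 'Stage: HH:MM - description' line per event, stage by stage."""
    return "\n".join(
        f"{stage}: {ts:%H:%M} - {desc}"
        for stage in STAGE_ORDER
        for ts, desc in timeline.get(stage, [])
    )


if __name__ == "__main__":
    sample = [
        {"timestamp": "2025-10-01T14:45:00", "event_type": "account_disable",
         "description": "User account 'jsmith' disabled"},
        {"timestamp": "2025-10-01T14:32:00", "event_type": "ids_alert",
         "description": "IDS alert triggered on server X"},
        {"timestamp": "2025-10-01T15:30:00", "event_type": "service_patch",
         "description": "Patched vulnerable service"},
    ]
    print(render(build_timeline(sample)))
```

Keyword matching is deliberately crude; its only job here is to show where a smarter classifier plugs in between parsing and rendering.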

Value of Automation

By automatically stitching logs together into a coherent story, the workflow gives engineers and responders immediate situational awareness during an incident. There’s no need to manually comb through thousands of log lines or pivot between tools to build the timeline: the information is assembled for you in seconds. This saves valuable time during analysis and reduces the chance that a key event is overlooked.

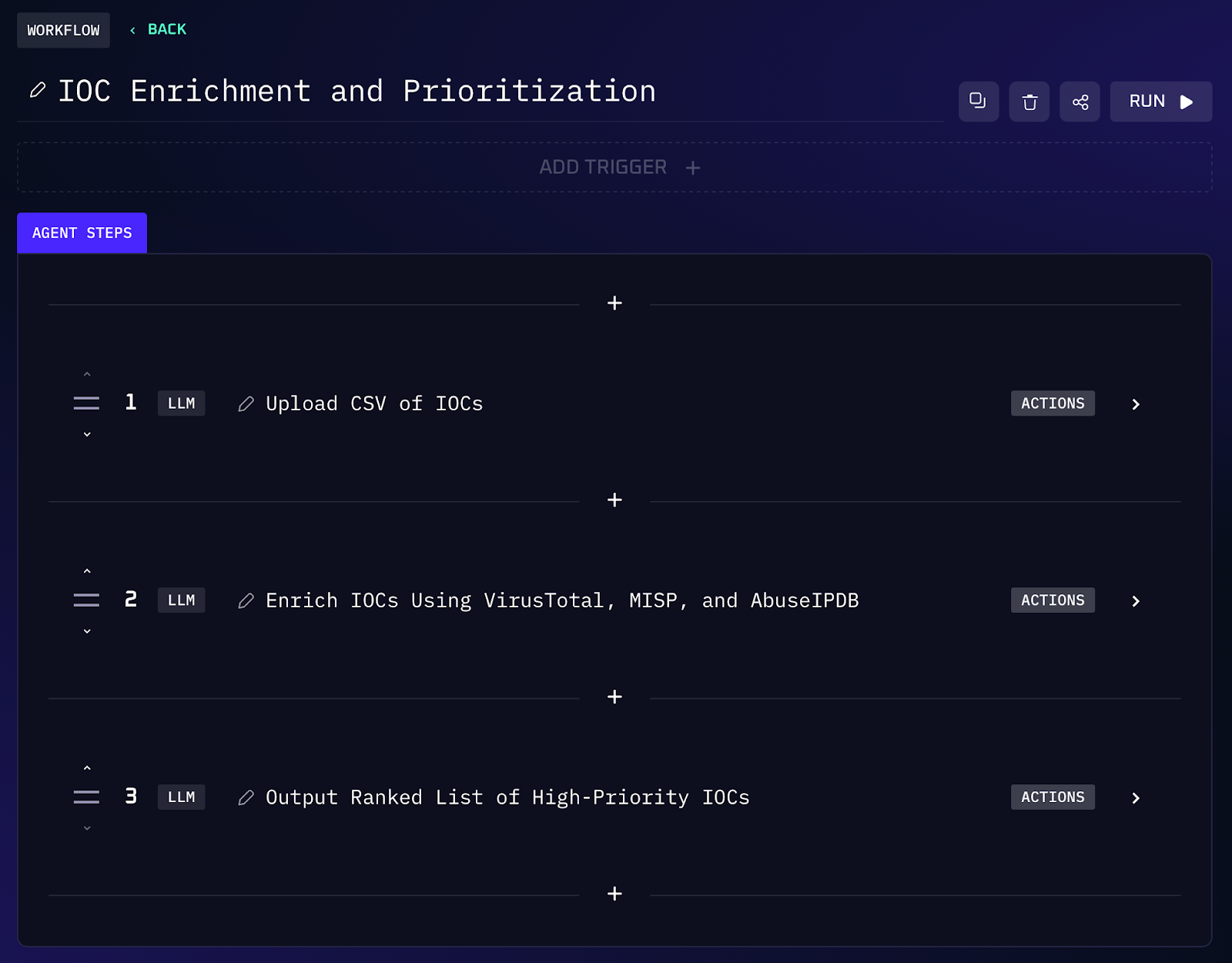

2. Mass IOC Lookup and Prioritization

After detecting a threat, analysts often gather a list of indicators of compromise (IOCs), such as IP addresses, file hashes, and domains, to investigate. Checking each IOC against threat intelligence sources one by one is tedious. An AI agent can greatly accelerate this by taking a bulk list (for example, a CSV of IOCs) and enriching every item in parallel. For instance, you might prompt: “Take this list of IOC hashes and URLs, look them up on VirusTotal, MISP, and AbuseIPDB, and tell me which are the highest priority.” The assistant queries multiple threat intel databases for each indicator, compiles the findings (malicious vs. benign, threat scores, related tags), and outputs a list of IOCs ranked by risk.

Workflow Steps (IOC Enrichment & Ranking)

1. The agent accepts an uploaded list of IOCs (from a CSV or incident report) which may include file hashes, URLs, and IP addresses. It then calls integrated threat intelligence APIs like VirusTotal (for malware file/hash and URL reputation scores), MISP (community threat sharing data), and AbuseIPDB (IP abuse reports).

2. For each indicator, the AI compiles the retrieved data: for example, VirusTotal might return a malware detection count or classification, MISP might provide threat actor or campaign context tags, and AbuseIPDB might indicate if an IP has a history of malicious activity. The agent aggregates these details for every IOC.

3. Next, the AI analyzes and prioritizes the IOCs. It could assign a composite severity score or simply flag the indicators that appear in multiple sources with high threat confidence. It also tags each IOC by category (e.g., marking an IP or domain as phishing-related when the intel includes such tags).

4. The agent outputs a ranked list, from highest priority to lowest. For example: “1. malware.exe (SHA256: <hash>) - High-risk malware hash (50/70 AV engines flagged on VirusTotal; associated with ransomware campaign); 2. baddomain.com - Phishing domain (in 2 threat feeds, active within last week); …”. This lets the team focus immediately on the most dangerous indicators.
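Step 3 mentions a composite severity score. One way such a score might be computed is sketched below, assuming the lookups have already been done and normalized into a hypothetical `IntelResult` record. The field names only mimic the kind of data each service returns (a detection count, matching events, an abuse confidence); they are not any vendor’s API, and the 50/30/20 weights and corroboration bonus are illustrative assumptions rather than a standard.

```python
from dataclasses import dataclass, field


@dataclass
class IntelResult:
    """Normalized lookup results for one IOC (hypothetical schema, not any vendor's API)."""
    ioc: str
    vt_malicious: int = 0      # engines that flagged it (VirusTotal-style detection count)
    vt_total: int = 0          # engines that scanned it
    misp_hits: int = 0         # matching MISP events
    abuse_confidence: int = 0  # 0-100 abuse confidence (AbuseIPDB-style, IPs only)
    tags: list[str] = field(default_factory=list)


def score(r: IntelResult) -> float:
    """Composite 0-100 risk score. The weights are illustrative assumptions."""
    vt = r.vt_malicious / r.vt_total if r.vt_total else 0.0
    misp = min(r.misp_hits, 3) / 3  # cap so one noisy feed cannot dominate
    abuse = r.abuse_confidence / 100
    base = 50 * vt + 30 * misp + 20 * abuse
    agreeing = sum(signal > 0 for signal in (vt, misp, abuse))
    # Small bonus when independent sources corroborate each other.
    return min(100.0, base + 5 * max(0, agreeing - 1))


def rank(results: list[IntelResult]) -> list[IntelResult]:
    """Highest-risk indicators first."""
    return sorted(results, key=score, reverse=True)


if __name__ == "__main__":
    results = [
        IntelResult("203.0.113.5", abuse_confidence=10),
        IntelResult("baddomain.com", vt_malicious=3, vt_total=70, misp_hits=2, tags=["phishing"]),
        IntelResult("malware.exe", vt_malicious=50, vt_total=70, misp_hits=2, tags=["ransomware"]),
    ]
    for i, r in enumerate(rank(results), 1):
        print(f"{i}. {r.ioc}: score {score(r):.1f}, tags {r.tags}")
```

The corroboration bonus encodes the "appears in multiple sources" heuristic from step 3; in practice the weights would be tuned to your own feeds and false-positive tolerance.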

Value of Automation

By automating IOC lookups and scoring, work that normally takes an analyst hours of cross-referencing is done in moments. The security team gets a unified view of threat intelligence without logging into multiple portals or copying data between spreadsheets. High-risk indicators surface right away, reducing the chance that a dangerous IOC is overlooked during the investigation. This leads to faster containment, because responders can prioritize blocking or monitoring the most harmful indicators first.

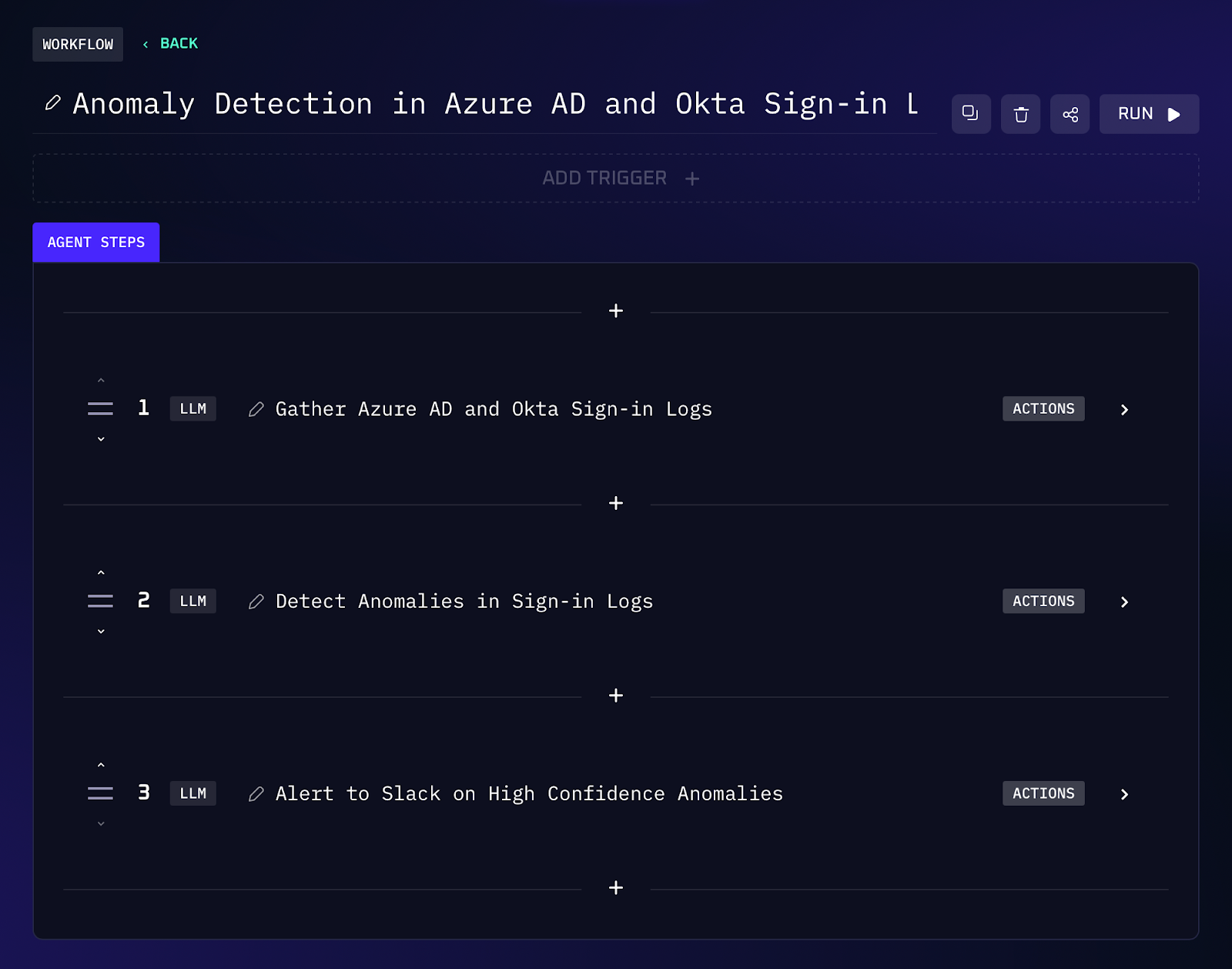

3. Monitor and Detect Anomalous Login Attempts

Credential compromise is a common breach vector, so keeping an eye on login activity is important. In a typical setup, an engineer relies on predefined alerts from identity providers or manually reviews logs for suspicious sign-ins, such as a user logging in from two countries an hour apart (“impossible travel”) or repeated failed logins. An AI workflow can continuously watch authentication logs across systems and detect anomalies in real time. Imagine telling the assistant: “Monitor our Azure AD and Okta login logs and alert me in Slack if something looks off, like impossible travel or logins from blacklisted IPs.” Kindo’s agent ingests the sign-in event stream, applies anomaly detection (including enriching IP addresses with context from GreyNoise or similar services), and notifies the team when it detects a high-confidence suspicious login.

Workflow Steps (Login Anomaly Detection)

1. The agent connects to identity and access management systems such as Azure Active Directory and Okta, continuously pulling sign-in events (successes and failures) as they occur. It aggregates logins by user, origin IP, geolocation, device, and time.

2. The AI analyzes patterns that could indicate compromise: for example, a single account attempting logins from widely different geographic locations within a short time frame (impossible travel), a surge in failed password attempts for one account, or logins from new locations/devices for a user who typically has a known pattern. It also checks each source IP against threat intelligence (using a service like GreyNoise to distinguish benign internet “background noise” from potentially targeted scanners or known malicious hosts).

3. When the model’s confidence in an anomaly exceeds a defined threshold (say 70%), the workflow takes action. The agent can send an immediate alert via Slack or email with details of the suspicious activity (e.g. “Alert: Possible compromised account – User jsmith logged in from France and then USA within 30 minutes. Source IP 203.0.113.5 flagged by GreyNoise as a malicious scanner.”). Optionally, the workflow could even initiate protective measures such as locking the account or triggering multi-factor authentication, depending on your automation policies.

4. All this happens proactively, so the security team is notified within seconds of a suspicious login pattern, rather than finding out hours later in a log review.
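The impossible-travel check from step 2 is simple enough to sketch directly. The example below is a minimal illustration, not Kindo’s detector: it assumes each sign-in has already been geolocated from its source IP, treats roughly 900 km/h (about airliner cruising speed) as the plausibility limit, and ignores hops under 100 km; both thresholds are assumptions. GreyNoise enrichment and the confidence threshold from step 3 are left out.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_SPEED_KMH = 900.0    # assumption: roughly airliner cruising speed
MIN_DISTANCE_KM = 100.0  # assumption: ignore nearby hops (VPN egress, mobile networks)


@dataclass
class Login:
    user: str
    time: datetime
    lat: float  # geolocated from the source IP (the geo lookup itself is out of scope)
    lon: float
    ip: str


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    # Clamp to guard against floating-point drift just above 1.0.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def impossible_travel(logins: list[Login]) -> list[tuple[Login, Login, float]]:
    """Flag consecutive logins per user whose implied travel speed exceeds MAX_SPEED_KMH."""
    last_seen: dict[str, Login] = {}
    alerts = []
    for login in sorted(logins, key=lambda entry: entry.time):
        prev = last_seen.get(login.user)
        if prev is not None:
            km = haversine_km(prev.lat, prev.lon, login.lat, login.lon)
            hours = (login.time - prev.time).total_seconds() / 3600
            speed = km / hours if hours > 0 else math.inf
            if km >= MIN_DISTANCE_KM and speed > MAX_SPEED_KMH:
                alerts.append((prev, login, speed))
        last_seen[login.user] = login
    return alerts
```

For the example alert in step 3, a Paris login followed 30 minutes later by a New York login implies a speed of more than 10,000 km/h, so the pair is flagged; a Paris-to-Lyon hop three hours apart is not.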

Value of Automation

AI-powered monitoring means potential account breaches are caught much faster than with periodic manual checks. By correlating login data across multiple platforms and enriching it with threat intel on IP addresses, the system cuts down false positives and noise, so the team’s alerts concentrate on activity that genuinely merits investigation. This saves time (analysts aren’t staring at logs all day) and strengthens defense: quick detection of unusual logins can be the difference between stopping an attack early and suffering a serious incident.

4. Investigate Phishing Emails with Attachments

Phishing remains one of the top causes of security incidents, and SOC analysts spend a lot of time investigating reported suspicious emails. Typically, an analyst would manually inspect the email headers, scan any attachments in sandboxes, check URLs against blocklists, and then summarize the findings. With an AI assistant, much of this triage can be handled instantly. For example, upon receiving a forwarded phishing email report, you might ask: “Analyze this email, who is the sender, are there any malicious links or attachments, and what should we do about it?” The agent (leveraging Kindo’s Deep Hat LLM tuned for security analysis) will extract the sender information, any URLs or attachments, analyze them for threats, and produce a concise report with a verdict and recommended actions.

Workflow Steps (Phishing Email Analysis)

1. The workflow kicks off when a suspected phishing email is provided to the agent (either via integration with an email reporting plugin or by pasting the email content including headers). The agent parses the email, extracting key details: sender address and domain, subject, body text, URLs in the content, and any attachments (which it can detonate in a safe environment or inspect if it’s a text-based attachment like an HTML or script).

2. Kindo’s Deep Hat LLM analyzes the content and artifacts. The LLM interprets the email’s language and intent (does it read like a phishing lure, and what is it trying to get the user to do?), while other tools check technical indicators such as header details, URL reputation, and attachment scan results.

3. The agent then synthesizes a brief summary of findings. For instance, it might output: “Verdict: Likely Phishing. The email claims to be from ‘Office365 Support’ but comes from a lookalike domain (off1cesupport.com). It urges the user to click a link that leads to a credential harvesting page. Indicators: 1 malicious URL (hxxp://loginverification[.]com/...); attachment UpdateInvoice.pdf contains an embedded script. Recommended Actions: Block the sender domain, add the URL to the blocklist, and alert users who received similar emails to ignore or delete them.”

4. The results are shared in the chat or forwarded to the ticketing system, so the SOC analyst or incident responder has an immediate, AI-curated report. They can then act quickly (blocking, user notification) without performing the time-consuming analysis manually.
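The extraction in step 1 needs nothing beyond Python’s standard `email` package. The sketch below assumes the report arrives as a raw RFC 5322 message with headers; it pulls out the sender, subject, defanged URLs, and attachment metadata that would then be handed to the LLM and sandbox (neither of which is shown). The `TRUSTED_BRAND_DOMAINS` allow-list and the `brand_mismatch` heuristic are hypothetical stand-ins for the lookalike-domain finding in the example verdict.

```python
import re
from email import message_from_string
from email.policy import default
from email.utils import parseaddr

URL_RE = re.compile(r"https?://[^\s\"'<>]+")
# Hypothetical allow-list: domains a message claiming to be from Microsoft may legitimately use.
TRUSTED_BRAND_DOMAINS = {"microsoft.com", "office365.com"}


def defang(url: str) -> str:
    """Make a URL non-clickable for reports, e.g. http://a.com -> hxxp://a[.]com."""
    return url.replace("http", "hxxp", 1).replace(".", "[.]")


def extract_artifacts(raw: str) -> dict:
    """Pull the sender, subject, URLs, and attachment metadata out of a raw message."""
    msg = message_from_string(raw, policy=default)
    display_name, address = parseaddr(str(msg.get("From", "")))
    body_part = msg.get_body(preferencelist=("plain", "html"))
    body = body_part.get_content() if body_part is not None else ""
    return {
        "display_name": display_name,
        "sender_domain": address.rpartition("@")[2].lower(),
        "subject": str(msg.get("Subject", "")),
        "urls": [defang(u) for u in sorted(set(URL_RE.findall(body)))],
        # Attachment contents are not opened here; detonation belongs in a sandbox.
        "attachments": [(p.get_filename(), p.get_content_type()) for p in msg.iter_attachments()],
    }


def brand_mismatch(artifacts: dict) -> bool:
    """True if the display name invokes Microsoft/Office365 but the domain is not allow-listed."""
    name = artifacts["display_name"].lower()
    claims_brand = "microsoft" in name or "office365" in name
    return claims_brand and artifacts["sender_domain"] not in TRUSTED_BRAND_DOMAINS
```

Passing `policy=default` makes the parser return an `EmailMessage`, which is what provides the `get_body` and `iter_attachments` helpers used above.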

Value of Automation

Automating phishing investigation shortens the time from email report to action. Instead of spending an hour dissecting a single suspicious email, analysts get an instant summary with the relevant indicators. This improves both the SOC’s throughput (more emails investigated in less time) and its consistency: the AI applies the same checks every time, whereas humans might occasionally miss a subtle indicator. Using a security-tuned LLM like Deep Hat means the social engineering context (tone, urgency, content of the email) is also factored into the verdict.

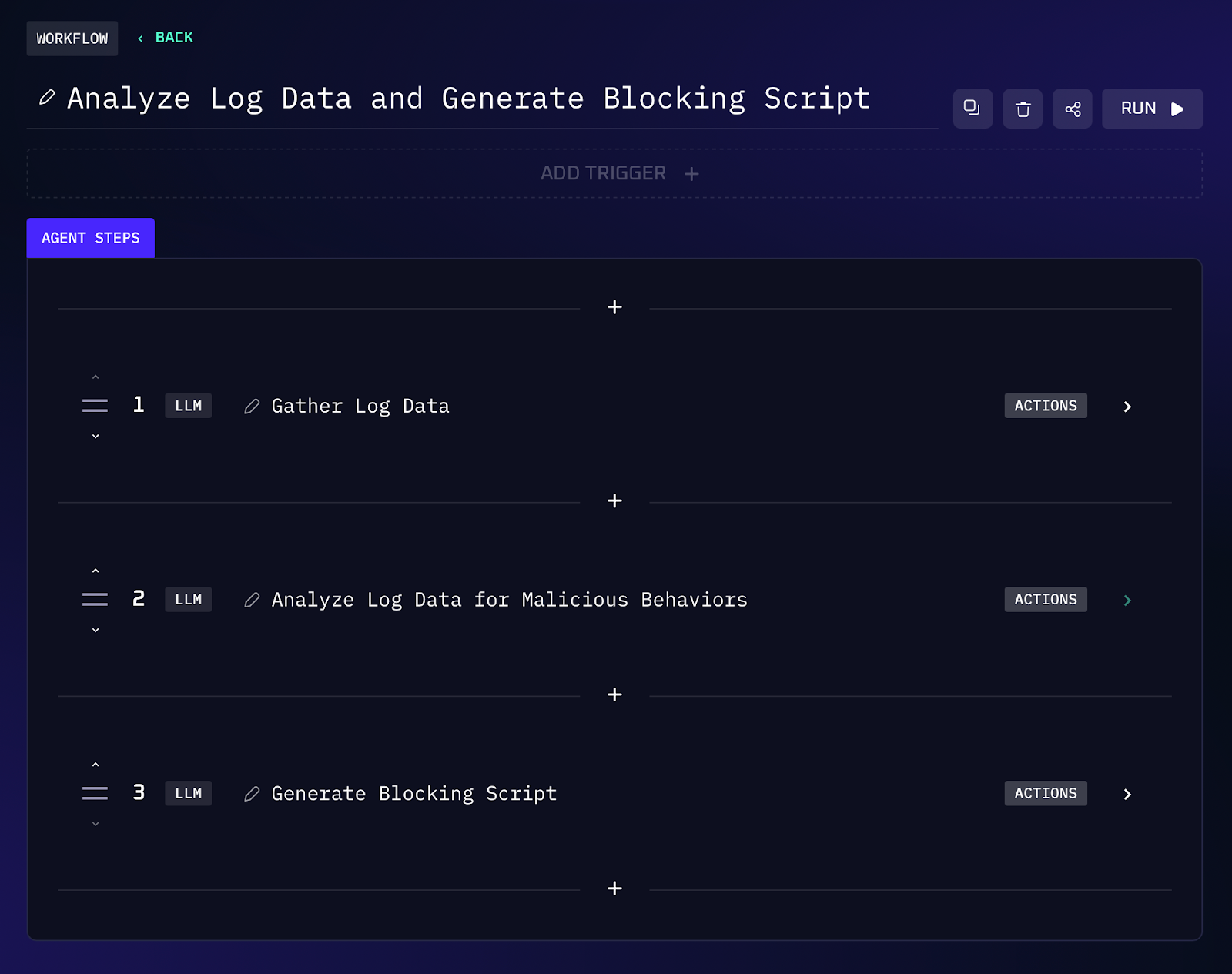

5. AI Assisted Script Creation for Remediation

When an incident is detected, responding often involves implementing tactical fixes or mitigations quickly. This might mean writing a script to block an IP across firewalls, kill a malicious process on endpoints, or collect specific logs for further analysis. Writing and testing such scripts under pressure is slow and error-prone. This is where AI can take the wheel: you could tell the AI, “Given this new malware behavior we found in the logs, generate a script to isolate affected machines and block the attacker’s IPs.” The AI agent analyzes the incident data or logs for patterns (like malicious IPs, file names, registry changes), then drafts a Python or PowerShell script that applies the necessary countermeasures.

Workflow Steps (Automated Script Generation)

1. The agent is triggered with context about the incident or new log data. For example, after identifying malicious IP addresses and processes from an attack, you prompt the AI with details (or it pulls them from the timeline and IOCs identified earlier).

2. Kindo’s AI analyzes malicious behavior patterns. It decides what actions a script should take to mitigate or prevent recurrence. For instance, if multiple failed logins from certain IPs were observed, the action might be to add those IPs to a firewall block list; if malware ran a specific process or created a rogue user account, the action might be to kill that process and disable the account across systems.

3. The AI then generates a script in the appropriate language (PowerShell for Windows environments, Bash or Python for cross-platform use) to implement those actions.

4. The draft script is presented in chat for review. It includes comments explaining what it does (e.g., “# This script disables the user account and adds IPs to firewall rules”). The security engineer can quickly validate or tweak the code if needed.
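Kindo’s agent drafts these scripts with an LLM; the sketch below does not attempt that. It is a deterministic, hypothetical template generator that shows the shape of a reviewable draft (commented, one action per line) and the input validation worth applying before any generated script reaches production. The PowerShell cmdlets (`New-NetFirewallRule`, `Stop-Process`, `Disable-LocalUser`) are standard Windows cmdlets; the function name, rule-naming scheme, and `SAFE_NAME` allow-list are assumptions for illustration.

```python
import ipaddress
import re

# Conservative allow-list for process and account names, so nothing can break out of the quotes.
SAFE_NAME = re.compile(r"[A-Za-z0-9._-]+")


def build_containment_script(ips: list[str], processes: list[str], accounts: list[str]) -> str:
    """Render a draft PowerShell containment script for human review. Nothing is executed here."""
    valid_ips = [str(ipaddress.ip_address(ip)) for ip in ips]  # ValueError on malformed input
    for name in [*processes, *accounts]:
        if not SAFE_NAME.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")

    lines = [
        "# DRAFT for review: blocks attacker IPs, stops malicious processes,",
        "# and disables compromised local accounts. Test before running.",
    ]
    for ip in valid_ips:
        for direction in ("Inbound", "Outbound"):
            lines.append(
                f"New-NetFirewallRule -DisplayName 'IR-Block-{direction}-{ip}' "
                f"-Direction {direction} -RemoteAddress {ip} -Action Block"
            )
    for proc in processes:
        # Stop-Process -Name expects the process name without the .exe extension.
        lines.append(f"Stop-Process -Name '{proc}' -Force -ErrorAction SilentlyContinue")
    for account in accounts:
        lines.append(f"Disable-LocalUser -Name '{account}'")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_containment_script(["203.0.113.5"], ["malware"], ["backdoor_admin"]))
```

`Disable-LocalUser` only covers local accounts; for domain accounts, `Disable-ADAccount` from the ActiveDirectory module would be the analogue. Validating every IOC before it is interpolated matters just as much when an LLM writes the script.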

Value of Automation

Having AI draft your containment and remediation scripts means defenses go up faster. Instead of writing boilerplate code from scratch during an incident (and potentially making mistakes in haste), responders get a head start with a generated draft. This reduces mean time to respond (MTTR) and can also capture expert knowledge: a model trained on a wide range of scenarios might include steps a less experienced responder wouldn’t think of.

Move Towards Conversational Security Operations

Each of these five workflows shows a shift in how SecOps teams can handle incidents: away from labor-intensive, siloed processes and toward a faster, unified, conversational workflow. Instead of analysts adapting to the quirks and interfaces of dozens of tools, the AI adapts to your needs, fetching data, correlating information, and executing changes across systems on command in natural language.

The outcome is faster detection and response times, fewer mistakes caused by fatigue or oversight, and a more transparent process that the whole team can follow in real time. Kindo’s security workflows exemplify this evolution by blending deep integrations with an agentic AI that works across your logs, platforms, and tickets like a tireless virtual analyst. It’s a move towards a more autonomous security operations approach, where mundane tasks are handled by AI, and human experts can focus on strategic defense and analysis.

Check out Kindo.ai to learn more about how these AI powered workflows can strengthen your incident response, or book a demo to see them in action.