
How Adversarial AI Helps Security Teams Build Resilience

Every day, cybercriminals find new ways to use AI to make their attacks more effective, from automating phishing campaigns to generating polymorphic malware.

Because of this, security teams can no longer afford to simply react to incidents as they happen. To protect your organization, you need to think like a threat, and that means giving your team AI tools as capable as the ones attackers use.

In this post, we'll cover why an adversarial mindset matters for resilience, how AI can act as a friendly adversary, and how we at Kindo keep this vision front and center with our own AI and agentic platform.

Why Thinking Like a Threat Builds Resilience

Security experts have long said that red teaming, or testing your own systems like a cybercriminal would, is important, and it's easy to see why: to strengthen your defenses, you need to know how they will be attacked. This kind of thinking is even more important now that attackers are using AI as a weapon.

Recent research shows that cybercriminals often use AI tools, even free ones like ChatGPT, to create phishing campaigns that look real and to improve their attack strategies. Adversarial thinking forces you to view your attack surface the way an attacker would:

• Which parts of our public systems are most vulnerable to attacks?

• How might someone combine small bugs to create a big security threat?

• Are we exposed to a known CVE or a newly discovered zero-day vulnerability?

By exploring these questions, defenders can fix issues proactively and rehearse responses to potential incidents. It’s the same principle as stress testing a bridge or running fire drills – by intentionally exposing yourself to risk in a controlled way, you toughen your systems and teams to handle real crises. When you embrace this mindset, security lapses become lessons.

How AI as an Adversary Enables Resilience

So, how can AI actually help defenders think like attackers? The answer lies in AI’s ability to learn, simulate, and scale adversarial techniques in ways humans can’t match on their own. A well-tuned security AI can comb through data, generate exploits, and probe systems with the creativity of a human cybercriminal, only much faster.

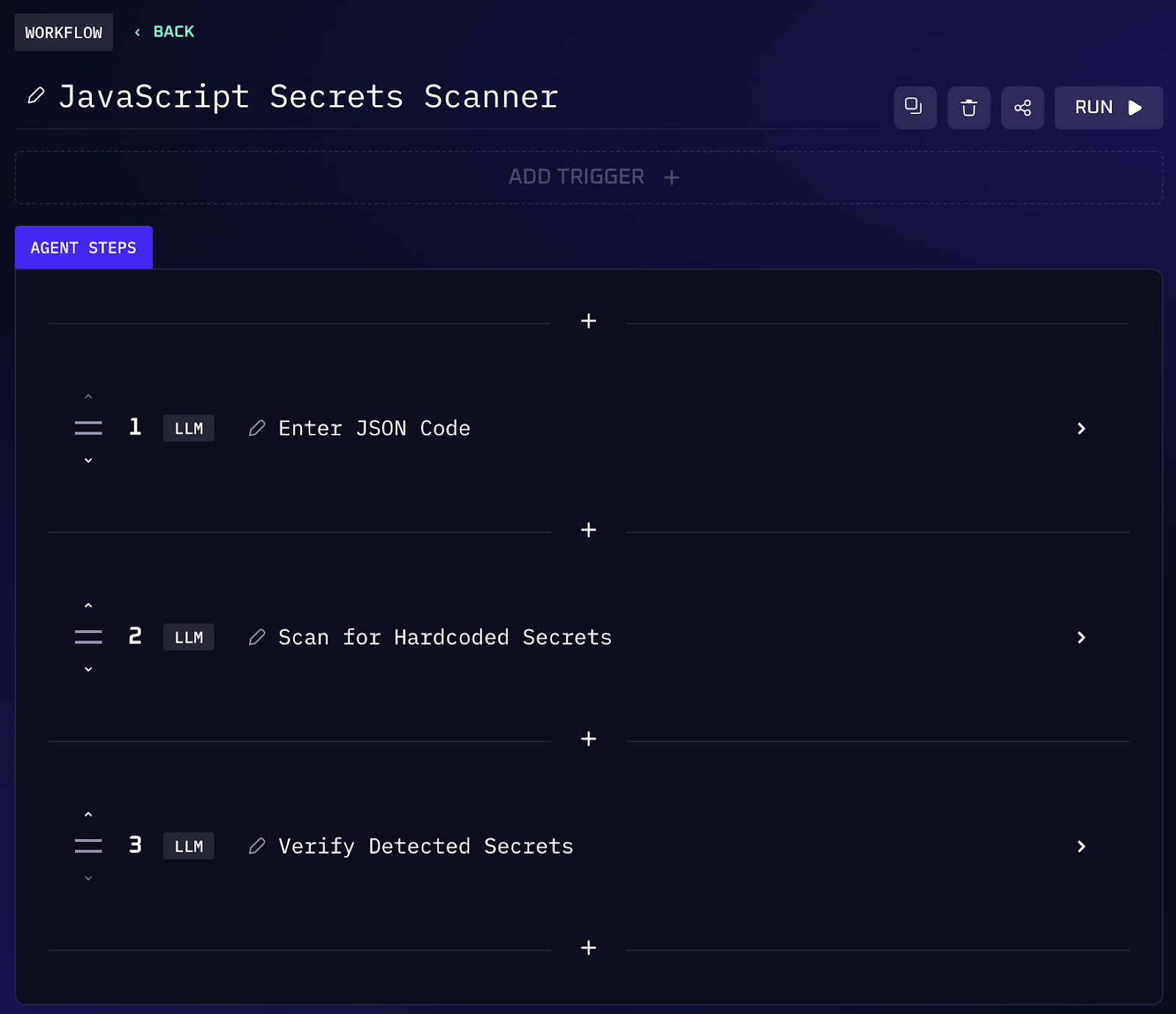

Think about some of the tasks that a cybercriminal might perform, and imagine turning those against your own environment for good. For instance, an attacker looking through your public code for hardcoded secrets is a genuine threat. AI can do that same job for you ahead of time.

We've set up workflows where our AI agent scans all the JavaScript and JSON files in a web app to find any API keys or credentials that developers may have accidentally left in the code. This kind of automated audit can find hidden tokens in seconds. If a cybercriminal found them first, the result could be an immediate breach.
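
To make the idea concrete, here is a minimal sketch of a secret scan over JavaScript and JSON files. It is not Kindo's implementation: the two patterns, the rule names, and the `scan_text` and `scan_tree` helpers are assumptions chosen for illustration, and a production scanner would use a much larger rule set.

```python
import re
from pathlib import Path

# Illustrative patterns only (assumed for this sketch, not an exhaustive rule set).
SECRET_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A secret-looking name assigned a quoted value of 12+ characters.
    "generic_assignment": re.compile(
        r"""\b(api[_-]?key|secret|token|password)\b["']?\s*[:=]\s*["'][^"'\s]{12,}["']""",
        re.IGNORECASE,
    ),
}


def scan_text(text):
    """Return (line_number, rule_name, matched_text) for each suspected secret."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            for match in pattern.finditer(line):
                findings.append((line_no, rule, match.group(0)))
    return findings


def scan_tree(root):
    """Scan every .js and .json file under root; map file path to its findings."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in {".js", ".json"}:
            hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

Calling `scan_tree("./build")` on a hypothetical build directory returns only the files with findings, which keeps the report short enough for a human to review.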

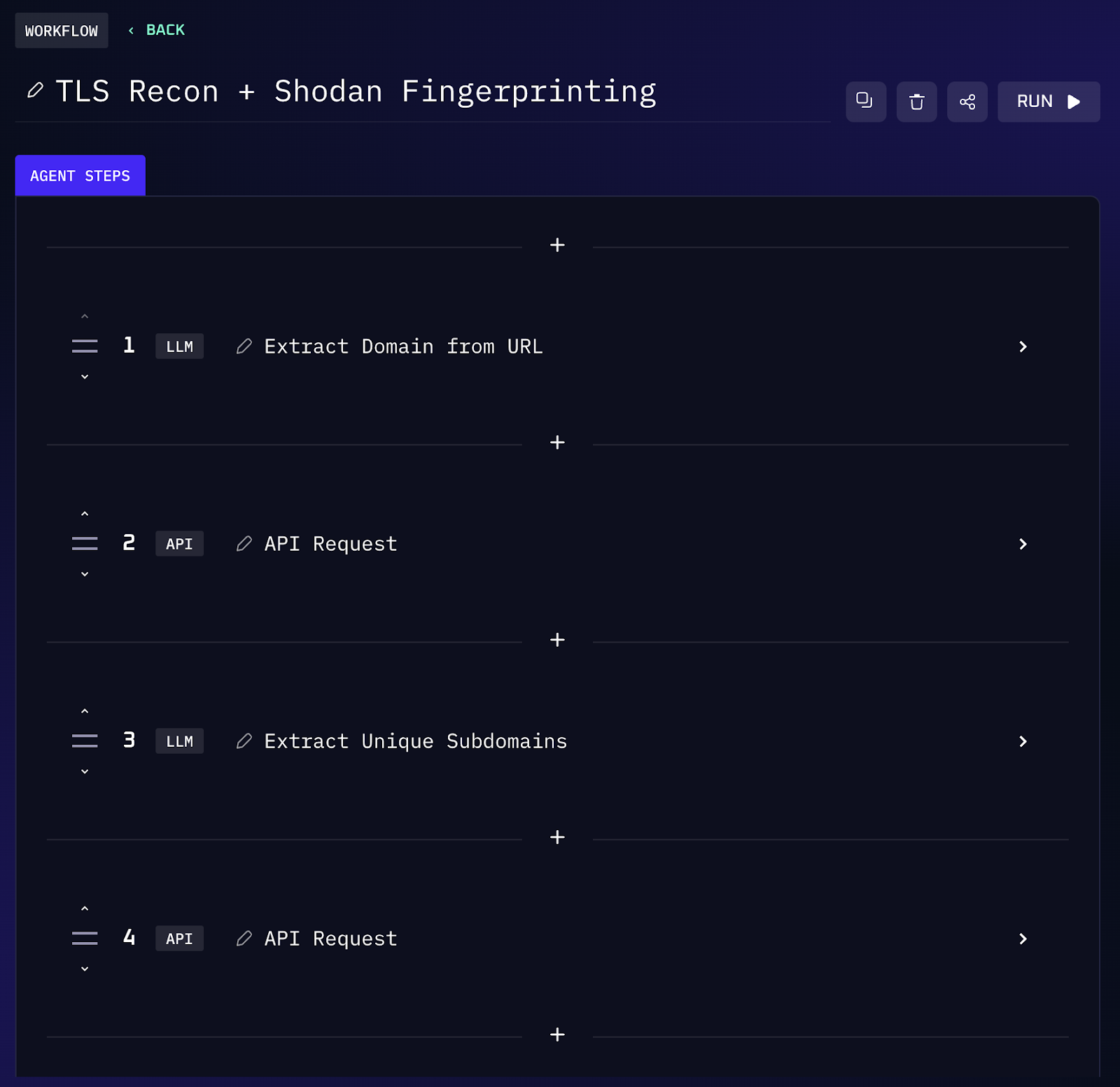

AI can also enumerate your attack surface with a cybercriminal's mindset. In the wild, attackers often trawl through leaked credential databases, certificate transparency logs, and services like Shodan to map out targets. This same methodology can be leveraged defensively.

An adversarial AI agent might automatically monitor certificate transparency logs for newly issued subdomains of your company and then query Shodan for open ports or services on those hosts.

The AI is basically conducting recon on your organization just like an external threat actor would, but every finding goes to you, the defender. If a forgotten test server or misconfigured database instance pops up, you’ll know immediately and can remediate the issue, rather than leaving an attacker to find it first.
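
The recon loop described above can be sketched as a diff between certificate transparency data and your known inventory. This is a sketch under stated assumptions: the records are assumed to be dicts with a newline-separated `name_value` field (the shape crt.sh uses in its JSON output), and `lookup_open_ports` is a hypothetical callable standing in for whatever port-lookup service you use, such as a Shodan wrapper. No network calls are made here.

```python
def extract_hostnames(ct_records, apex_domain):
    """Collect unique hostnames under apex_domain from CT-style records.

    Assumes each record is a dict whose "name_value" field holds one or more
    newline-separated names.
    """
    apex = apex_domain.lower()
    suffix = "." + apex
    hosts = set()
    for record in ct_records:
        for name in record.get("name_value", "").splitlines():
            name = name.strip().lower()
            if name.startswith("*."):
                name = name[2:]  # treat a wildcard entry as its parent name
            if name == apex or name.endswith(suffix):
                hosts.add(name)
    return hosts


def find_new_hosts(ct_records, apex_domain, known_hosts):
    """Return hostnames seen in CT data that are missing from the inventory."""
    known = {h.lower() for h in known_hosts}
    return sorted(extract_hostnames(ct_records, apex_domain) - known)


def triage(new_hosts, lookup_open_ports):
    """Map each new host to its open ports.

    lookup_open_ports is a hypothetical caller-supplied function that takes a
    hostname and returns an iterable of port numbers.
    """
    return {host: sorted(lookup_open_ports(host)) for host in new_hosts}
```

Matching on the `"." + apex_domain` suffix, rather than a plain substring, keeps lookalike names such as `example.com.evil.net` out of the results.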

Perhaps one of the most powerful aspects of AI in this role is its ability to generate and test exploits.

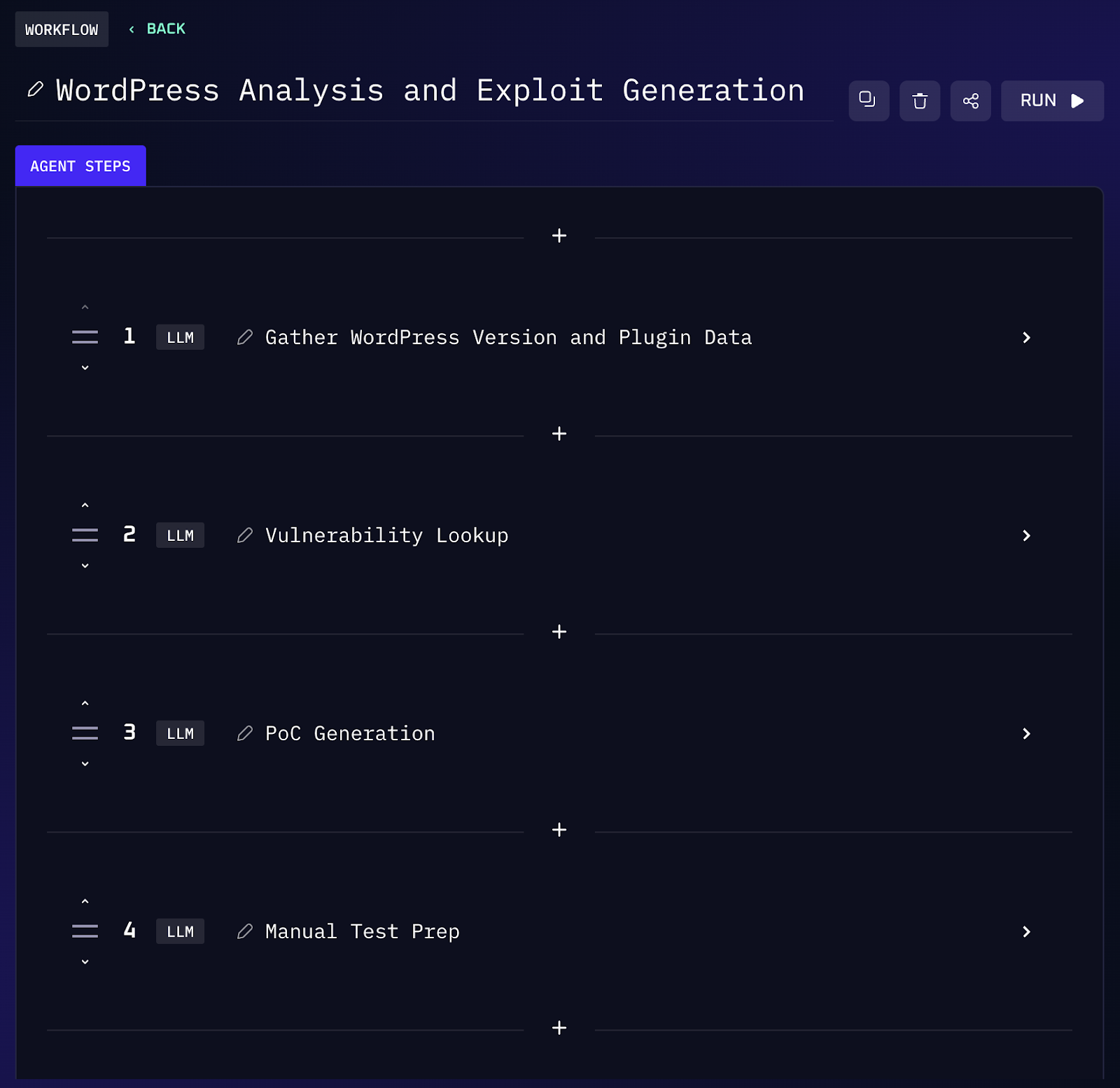

Traditionally, when a new vulnerability (say, a fresh CVE) is announced, attackers rush to reverse engineer it and develop exploits, while defenders rush to patch. With the right AI, you don’t have to play catch-up. Your AI can help you play offense on your own systems.

Generative AI models can be instructed to create proof-of-concept exploit code based on a vulnerability description, effectively reproducing what a skilled cybercriminal might do, but as an aid to the security team.

This isn’t about causing damage; it’s about anticipating damage. If your AI can generate a working exploit in minutes, you can verify whether your systems are actually vulnerable and prioritize patching accordingly, long before an adversary deploys a similar exploit maliciously.
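
The prioritization step can be illustrated with a small triage sketch. It assumes a hypothetical inventory of hosts, purely numeric dotted version strings, and an advisory that gives an "introduced" and a "fixed" version. None of these names come from Kindo's product. The sketch only narrows down which hosts are worth testing first; it does not confirm exploitability.

```python
from dataclasses import dataclass


def parse_version(text):
    """Turn '2.4.10' into (2, 4, 10). Assumes purely numeric dotted versions."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Host:
    name: str
    version: str
    internet_facing: bool


def is_affected(version, introduced, fixed):
    """True when introduced <= version < fixed, compared numerically."""
    return parse_version(introduced) <= parse_version(version) < parse_version(fixed)


def patch_queue(hosts, introduced, fixed):
    """Return affected hosts, internet-facing ones first, then by name."""
    affected = [h for h in hosts if is_affected(h.version, introduced, fixed)]
    return sorted(affected, key=lambda h: (not h.internet_facing, h.name))
```

Comparing parsed tuples instead of raw strings matters here: as text, "2.4.10" sorts before "2.4.9", which would misjudge which hosts fall inside the affected range.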

Keeping Adversarial AI on Your Side

Keeping this AI capability, which is often used against us, on our side has been at the heart of what we do at Kindo since the beginning. From the start, we recognized that if cybercriminals were using AI to attack, defenders needed their own AI ally, one that thinks like an attacker but is entirely controlled by the security team.

Our answer has two parts: our adversarially trained large language model and the Kindo platform that supports it. Together, they form an AI red team assistant with the safeguards it needs to operate in an enterprise environment.

Kindo’s LLM is an AI designed for security tasks. Unlike generic AI, it has been trained on a vast corpus of DevOps, SecOps, and threat intelligence data. It learned from exploit code, configuration files, and incident reports instead of just Wikipedia.

This specialization means that Kindo and our LLM can dive into tasks like analyzing code for vulnerabilities, interpreting logs for signs of compromise, or enumerating cloud configurations for misconfigurations with an expert’s perspective.

Of course, giving an AI the capability and access to act like an attacker in your environment sounds scary without the correct safeguards. That’s where Kindo’s platform comes in, turning AI power into controlled, auditable workflows.

We’ve built Kindo as an agentic AI platform. In plain terms, it is a system where AI agents don’t just chat about problems but can take action, all under strict oversight. Each AI-driven operation runs in a sandboxed, step-by-step workflow that you define and approve.

You might connect an agent to external tools or data sources (APIs for things like Shodan, VirusTotal, cloud services, etc.), and then let the AI orchestrate a sequence of actions. But nothing is left to chance: you set the objectives, permissions, and boundaries. Every action the AI takes, whether it’s scanning an IP range, pulling data from an API, or executing a script, is logged and traceable.

We’ve also baked in role-based access controls, policy guardrails, and human checkpoints where you want them. The goal is to enable autonomy with accountability. Think of it like a highly skilled (and somewhat superhuman) team member that still follows your team’s rules and reports back on everything it does.
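
As a rough illustration of autonomy with accountability, the sketch below wraps every agent action in an allowlist check, an optional human approval step, and an audit log entry. It is a generic pattern, not Kindo's API: `GuardedRunner`, its fields, and the `approve` callback are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class GuardedRunner:
    allowed_actions: set                    # actions the agent may run at all
    needs_approval: set                     # subset that requires human sign-off
    approve: Callable[[str, dict], bool]    # hypothetical human-approval callback
    audit_log: list = field(default_factory=list)

    def run(self, action, params, handler):
        """Run handler(**params) only if policy allows it; always log the attempt."""
        if action not in self.allowed_actions:
            outcome = "denied"
        elif action in self.needs_approval and not self.approve(action, params):
            outcome = "rejected"
        else:
            outcome = "executed"
        result = handler(**params) if outcome == "executed" else None
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "params": params,
            "outcome": outcome,
        })
        return outcome, result
```

Because denied and rejected attempts are logged alongside executed ones, the log shows what the agent tried to do as well as what it actually did.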

Ready to Think Like a Threat?

Attackers don't wait for alerts. They think outside the box, move quickly, and take advantage of vulnerabilities that others miss. Defenders need to act the same way. Kindo gives your team an adversarial edge by letting you simulate threats, scan your own environment like an attacker would, and automate the fixes.

With Kindo and our LLM, you can consolidate separate tools and replace manual testing. Most teams cut their tool count by up to 80% while improving speed, accuracy, and resilience.

If you're ready to think like a threat, and act faster than one, request a demo.

We'll show you how Kindo helps security leaders operationalize adversarial thinking at scale, without sacrificing oversight or control. It’s not just AI for automation. It’s AI that works for operators.