Compounding Advantage: Why AI's Winners Build Control Planes

AI isn’t a product category. It’s a platform reset. Every time compute changes, the control plane moves. Whoever captures that layer compounds advantage across everything else.

That’s the point of this piece. The winners won’t be whoever ships the flashiest chatbot or biggest demo. They’ll be the ones who turn AI into an operational system that compounds, where governance, model choice, and execution live in the same loop.

In my “How AI is Reshaping the Enterprise: A Wake-Up Call for Leaders” talk, I argued that the center of gravity always moves to whoever turns new compute into durable operating advantage. In “Trust First: Choosing AI You Can Actually Live With,” I argued that advantage only lasts when governance is designed in from the start. This essay connects both: how control, trust, and compounding value come together to define the next era of enterprise software.

The uncomfortable truth about model choice and where your data lives

Enterprises still default to a single lab because it’s convenient, but that convenience carries a strategy tax. Price-performance leadership shifts month to month. Open-source models are now strong enough for real work across many tasks, and when you can run them in your own environment you reduce three exposures: AI labs competing with you for revenue, legal discovery from retention orders, and third parties publishing meta-analytics about your usage patterns. In “Trust First” I asked leaders to treat model routing, data residency, retention, and audit as board-level questions. That wasn’t theory. It’s the only way to build a foundation you can live with and keep compliant when regulations and lawsuits arrive on the AI labs’ timeline, not yours.

GPUs changed the game, not just the model names

We’re living through a total platform flip from CPUs to GPUs. In past platform shifts, a 100,000x improvement was enough to rewrite how business ran. GPU price-performance has already passed that mark and is now a billion times more efficient. That’s why the market’s capital spend curves point up and to the right. It’s also why the constraint is moving from chips to power. Even with major gains in tokens per watt, you still need electricity to turn tokens into answers. Inference is the new load. Test-time compute grows as you ask for higher quality and longer think times. If you want full-time AI copilots that can actually shoulder valuable work, your IT budget is about to look like a power plan.
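To make the power point concrete, here is a back-of-the-envelope sketch of how inference energy scales with think time. Every number in it is a hypothetical placeholder, not a measurement, and the function name and the `tokens_per_joule` efficiency figure are assumptions introduced for illustration.

```python
def daily_inference_kwh(requests_per_day: int,
                        tokens_per_request: int,
                        tokens_per_joule: float) -> float:
    """Estimate daily energy use in kWh for an inference workload."""
    if tokens_per_joule <= 0:
        raise ValueError("tokens_per_joule must be positive")
    joules = requests_per_day * tokens_per_request / tokens_per_joule
    return joules / 3_600_000  # 1 kWh = 3.6 million joules


# Hypothetical workload: same request volume, 10x longer "think time".
short_think = daily_inference_kwh(100_000, 1_000, tokens_per_joule=2.0)
long_think = daily_inference_kwh(100_000, 10_000, tokens_per_joule=2.0)

print(f"short: {short_think:.1f} kWh/day, long: {long_think:.1f} kWh/day")
```

At a fixed efficiency, energy grows linearly with tokens generated, so asking for ten times more reasoning per request costs ten times the electricity unless efficiency improves by the same factor.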

The moat is operational. Advantage will not come from who has the most GPUs or the latest model contract. It will come from how intelligently you use what you already control: capacity you can count on, models you can swap, and verification that cuts review time. Do that and your cost per outcome keeps dropping. Over time, that operational flywheel of faster resolution, cleaner evidence, and lower marginal cost per action becomes the compounder that competitors cannot copy with budget alone.

AI agents are real, but they aren’t magic

Venture dollars are pouring into agents for every function in the business. That’s good. It’s also how you end up with hundreds of brittle bots that no one governs. Agents only become enterprise-durable when they are embedded in a system that can plan work, run work, verify results, protect secrets, and write everything to an audit log. Whenever I speak at conferences or with customers, I show why this loop matters for technical operations. It’s not enough to call tools. You need policy boundaries, environment isolation, model routing, DLP filtering, secrets handling, and after-action evidence that stands up to scrutiny. Otherwise you just move tribal automation from humans to scripts with a chat UI.
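A minimal sketch of that loop, under stated assumptions: a plan is a list of `(tool, arg)` steps, the policy boundary is a simple allow-list, verification is a caller-supplied check, and the audit log is a hash chain so later tampering is detectable. The names `redact`, `AuditLog`, and `run_plan` are hypothetical and do not describe any particular product’s API.

```python
import hashlib
import json
import re
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical pattern for credential-like strings; real DLP rules are broader.
SECRET = re.compile(r"(?i)\b(api[_-]?key|token|password)\s*=\s*\S+")


def redact(text: str) -> str:
    """Mask credential-like values before anything is written to the log."""
    return SECRET.sub(lambda m: m.group(1) + "=[REDACTED]", text)


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> None:
        """Chain each entry to the previous one by hash."""
        prev = self.entries[-1]["hash"] if self.entries else ""
        body = json.dumps(event, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode()).hexdigest()
        self.entries.append({"event": event, "prev": prev, "hash": digest})

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks it."""
        prev = ""
        for entry in self.entries:
            body = json.dumps(entry["event"], sort_keys=True)
            digest = hashlib.sha256((prev + body).encode()).hexdigest()
            if entry["prev"] != prev or entry["hash"] != digest:
                return False
            prev = entry["hash"]
        return True


def run_plan(plan: list, tools: dict, allowed: set,
             check: Callable[[str], bool], log: AuditLog) -> bool:
    """Run each (tool, arg) step inside the policy boundary; stop on failure."""
    for tool, arg in plan:
        if tool not in allowed:
            log.append({"tool": tool, "arg": redact(arg), "status": "denied"})
            return False
        output = tools[tool](arg)
        status = "verified" if check(output) else "failed"
        log.append({"tool": tool, "arg": redact(arg),
                    "output": redact(output), "status": status})
        if status == "failed":
            return False
    return True


log = AuditLog()
tools = {"echo": lambda arg: "ran: " + arg}
plan = [("echo", "rotate password=hunter2"), ("delete_db", "prod")]
finished = run_plan(plan, tools, allowed={"echo"},
                    check=lambda out: out.startswith("ran:"), log=log)
```

Here the first step runs and is verified, the second is denied because it falls outside the allow-list, and the secret never reaches the log. Environment isolation and model routing are left out to keep the sketch short.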

The three levers of compounding enterprise advantage

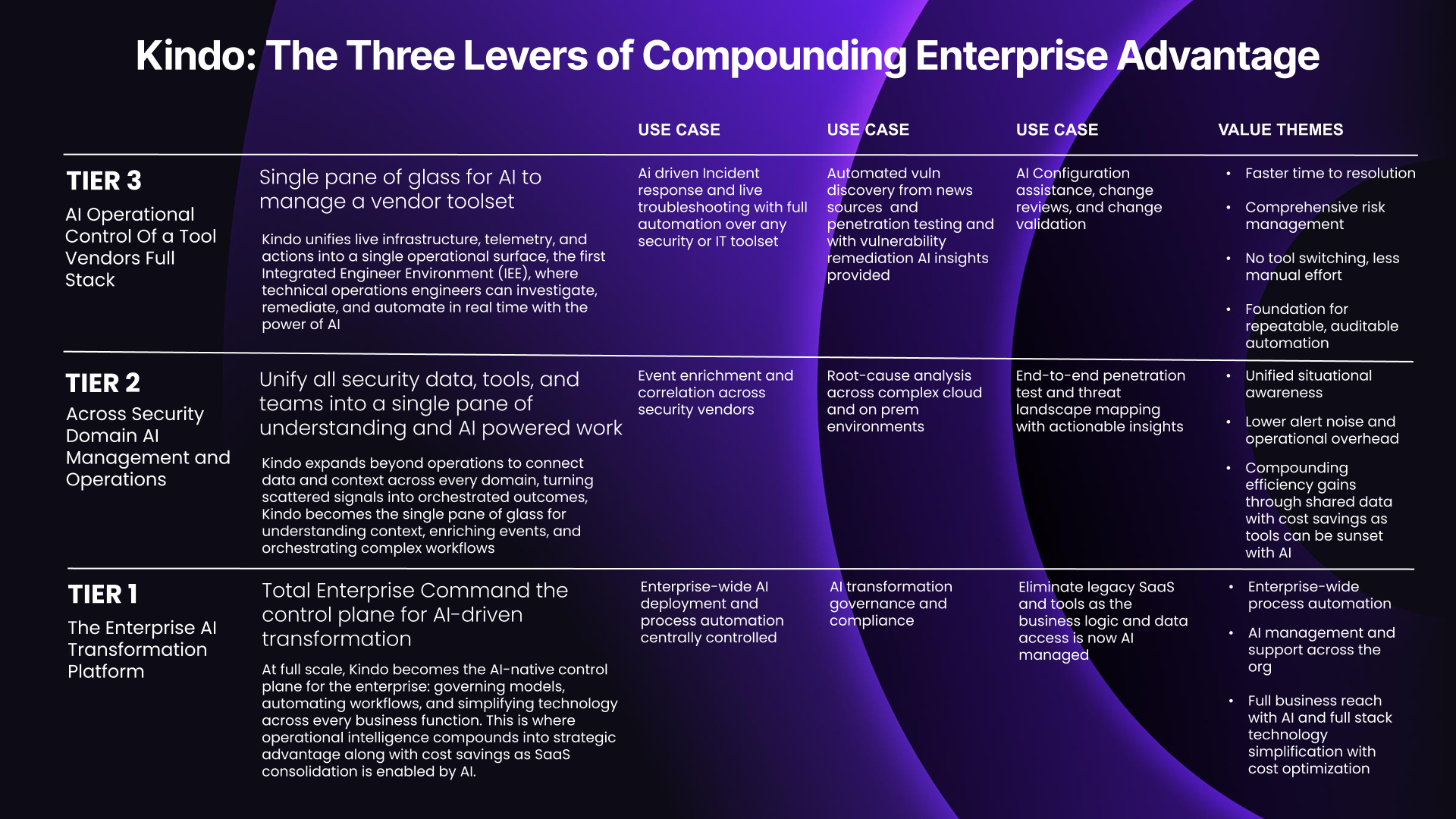

I created a framework that reflects how technical operations typically mature when using Kindo: from isolated tools to unified visibility to AI-driven control. The tiers count down, from Tier 3 as the starting point to Tier 1 at full enterprise scale. The gains multiply when the progression is intentional.

Tier 3: AI Operational Control of a Tool Stack

This is where the flywheel starts turning. Kindo becomes the single pane of glass for AI to manage your operational stack. Live telemetry, automation, and model reasoning come together inside the Integrated Engineer Environment (IEE), allowing engineers to investigate, remediate, and optimize without leaving their environment. The focus is execution: resolving incidents faster, validating changes safely, and hardening configurations continuously.

Tier 2: Cross-Domain AI Management and Operations

Once individual tools and workflows are under control, the next step is connecting them. Kindo unifies telemetry, alerts, and context across Security, Dev, and IT domains to create a shared operational picture. AI correlates events, enriches data, and turns fragmented signals into actionable understanding. At this stage, teams move beyond tool-level fixes to organization-wide intelligence, lowering noise, reducing duplication, and compounding value through shared context.

Tier 1: The Enterprise AI Transformation Platform

At full scale, Kindo operates as the AI-native control plane for the enterprise. This is where transformation compounds. Governance, automation, and data access converge into one command layer that standardizes policy, drives compliance, and eliminates redundant systems. AI becomes the connective tissue of the business itself, managing logic, orchestrating workflows, and consolidating tools across every function. The result is measurable efficiency, reduced complexity, and full business readiness for the AI era.

The Physics of Trust

Once operations start compounding, trust and efficiency collapse into the same problem. You can’t separate governance from cost or compliance from compute. Every token, watt, and log entry becomes part of how accountable your system really is.

Where models run, who sees prompts, and how long artifacts persist all have direct cost and regulatory weight. The same architecture that routes to the cheapest model or local GPU should also enforce audit trails and policy. If you can’t replay what happened six months ago and get the same result, you don’t have governance. You have drift.
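One way to picture routing and evidence living in the same loop is the sketch below. It picks the cheapest model that satisfies a residency policy, fingerprints the decision, and later replays it to detect drift. The model names, prices, and the `require_local` policy flag are all hypothetical, and the sketch replays only the routing decision. Replaying model outputs would presumably also need pinned model versions and deterministic settings.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    cost_per_1k_tokens: float
    runs_locally: bool


def route(models: list[Model], require_local: bool) -> Model:
    """Pick the cheapest model that satisfies the residency policy."""
    eligible = [m for m in models if m.runs_locally or not require_local]
    if not eligible:
        raise LookupError("no model satisfies the policy")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


def decision_record(task: str, require_local: bool, chosen: Model) -> dict:
    """Build an audit record with a fingerprint of the decision."""
    record = {"task": task, "require_local": require_local,
              "model": chosen.name}
    payload = json.dumps(record, sort_keys=True).encode()
    record["fingerprint"] = hashlib.sha256(payload).hexdigest()
    return record


def replay_matches(record: dict, models: list[Model]) -> bool:
    """Re-run the routing decision and compare it with the stored record."""
    chosen = route(models, record["require_local"])
    replayed = decision_record(record["task"], record["require_local"], chosen)
    return replayed == record


models = [
    Model("hosted-large", 0.50, runs_locally=False),
    Model("local-open", 0.80, runs_locally=True),
    Model("hosted-small", 0.10, runs_locally=False),
]
record = decision_record("triage alert", True, route(models, True))

# Later, the catalog changes without a matching policy or record update.
drifted = models + [Model("local-new", 0.05, runs_locally=True)]
print(replay_matches(record, models), replay_matches(record, drifted))
```

Against the original catalog the replay reproduces the record. Against the changed catalog it does not, which is the drift the paragraph above warns about.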

AI now lives at the intersection of trust and throughput. The winners will design for both: systems that optimize for energy, accuracy, and evidence at once.

What this means for enterprise teams

Elastic model strategy beats vendor loyalty. Route per task. Keep your options open.

Power planning now belongs to infrastructure and ops leaders. Budget for inference like you budget for compute.

Agent sprawl is coming. Without a control plane, you’ll recreate the same tribal automation you were trying to escape.

Verification becomes the trust layer. Every action, scan, or decision must be reproducible, explainable, and independently verifiable. That is how you build confidence between teams and regulators, not through dashboards or decks.

Start where pain is highest. Prove value in technical operations, compound into cross-domain intelligence, and finish with enterprise-wide governance. One path from incidents to outcomes.

Where Kindo and Deep Hat fit

Kindo is the Integrated Engineer Environment, a single AI-native terminal where Security, Dev, and IT operators build, run, and verify real work across their stack. From there it compounds into cross-domain intelligence and lands as an enterprise control plane with centralized governance.

At its core is Deep Hat, Kindo’s open DevSecOps model trained on real infrastructure and security data. It runs fully in your environment and speaks your tools without sending data outside your walls. Deep Hat powers each tier with domain-tuned reasoning while keeping data private and inference local.

Nobody needs more features. Teams need a way to turn new compute into reliable outcomes. That’s the compounding advantage. Build the control plane, and the rest follows.